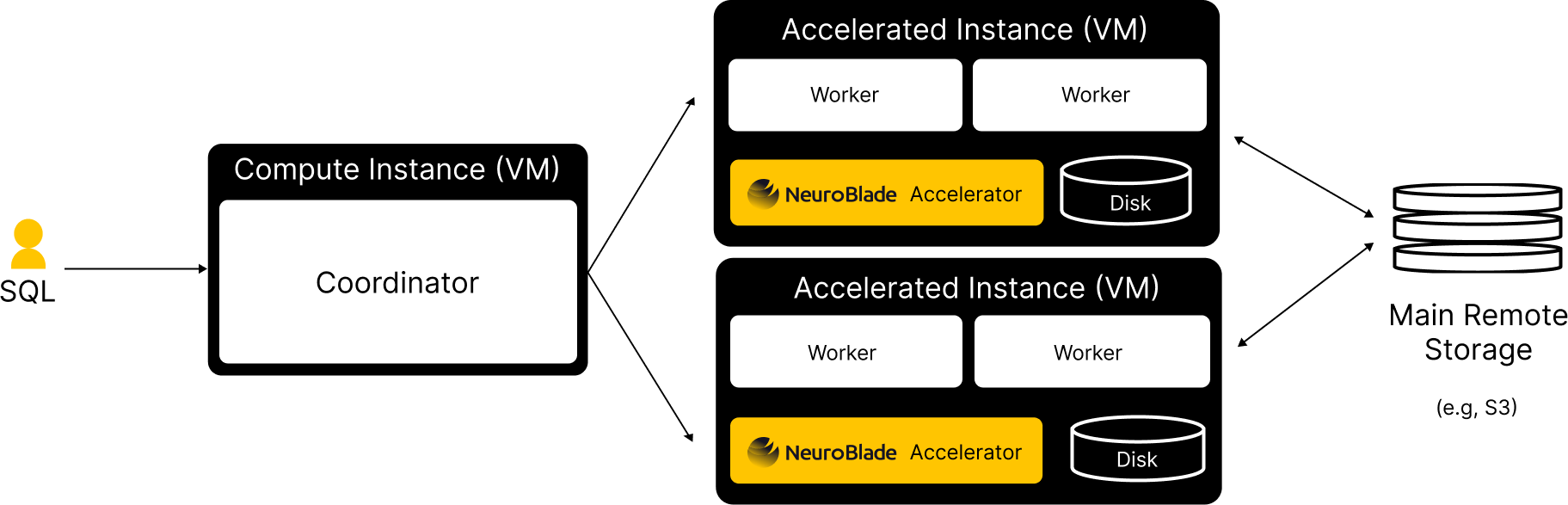

The NeuroBlade Accelerator offers a revolutionary approach to enhancing the speed of data analytics workloads. In collaboration with leading Cloud Service Providers, NeuroBlade is integrating the Accelerator into cloud instances to deliver more efficient solutions across cloud and serverless offerings. Available on selected cloud provider instances such as AWS EC2 F2, the Accelerator integrates with existing data processing frameworks, providing hardware acceleration that complements the optimizations those frameworks already use.

In a cloud-driven data landscape, access to instances optimized for data analytics is essential. The NeuroBlade Accelerator, now available in managed cloud instances, brings hardware-accelerated processing to cloud-based workloads, allowing organizations to run queries faster and achieve significant TCO savings. This capability is especially important for AI and machine learning (AI/ML) workloads, where processing vast amounts of data efficiently is paramount.

Optimized Performance

Achieve up to 50x faster query processing, optimized for high-speed analytics in a cloud environment.

Seamless Integration

Integrates smoothly with existing data analytics workflows, minimizing disruption and maximizing efficiency.

AI/ML Ready

Built for high-performance AI/ML workloads, accelerating data preparation and analysis.

Flexible Deployment

Available on select cloud provider instances, enabling simple deployment and scalability within cloud infrastructure.

TCO Efficiency

Cuts cloud infrastructure costs through efficient, hardware-accelerated processing.

Proven Value

Early deployment lets customers evaluate the speed and cost benefits of the NeuroBlade Accelerator in a cloud setting.
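To make the link between query speed and TCO concrete: the cost of a query is roughly the instance's hourly price multiplied by the query's runtime. If an accelerated instance runs a query S times faster than a baseline instance, the runtime cancels out and the accelerated cost per query relative to the baseline is (accelerated price / baseline price) / S. Acceleration therefore pays off whenever S exceeds the price ratio. The Python sketch below illustrates this arithmetic only. The function names and any prices you feed it are hypothetical, it ignores storage, data transfer, and licensing costs, and "up to 50x" is an upper bound, so measured speedups for your own queries are the right inputs.

```python
def cost_per_query(hourly_price: float, query_seconds: float) -> float:
    """Cost of one query: hourly instance price times runtime converted to hours."""
    return hourly_price * query_seconds / 3600.0


def relative_cost(baseline_price: float, accel_price: float, speedup: float) -> float:
    """Accelerated cost per query divided by baseline cost per query.

    Accelerated runtime is t / speedup, so the runtime t cancels:
    (accel_price * t / speedup) / (baseline_price * t) = (accel_price / baseline_price) / speedup
    """
    if baseline_price <= 0 or speedup <= 0:
        raise ValueError("baseline price and speedup must be positive")
    return (accel_price / baseline_price) / speedup


def break_even_speedup(baseline_price: float, accel_price: float) -> float:
    """Minimum speedup at which the accelerated instance costs no more per query."""
    if baseline_price <= 0:
        raise ValueError("baseline price must be positive")
    return accel_price / baseline_price
```

For example, with hypothetical prices of $4/hour for a baseline instance and $8/hour for an accelerated one, a 10x speedup gives `relative_cost(4.0, 8.0, 10.0) == 0.2`, i.e. an 80% lower cost per query, while any speedup below `break_even_speedup(4.0, 8.0) == 2.0` would cost more per query than the baseline.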

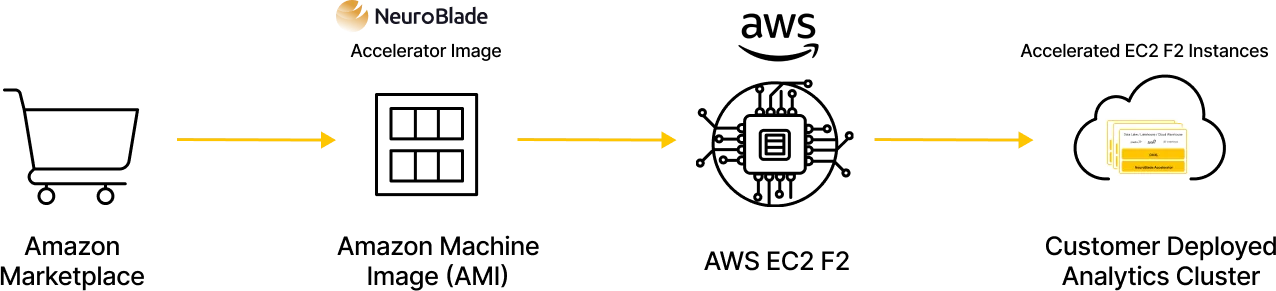

NeuroBlade’s data analytics acceleration technology is available on the AWS cloud via the newly released EC2 F2 instances. Its availability on EC2 F2 lets customers quickly evaluate its benefits, including faster query processing, TCO savings, and the ability to enhance their own services with more efficient data analytics processing.

| Instance Name | FPGAs | vCPUs | FPGA Memory (HBM / DDR4) | Instance Memory (GiB) | Local Storage (GiB) | Network Bandwidth (Gbps) | EBS Bandwidth (Gbps) |
|---|---|---|---|---|---|---|---|
| f2.6xlarge | 1 | 24 | 16 GiB / 64 GiB | 256 | 1x 950 | 12.5 | 7.5 |
| f2.12xlarge | 2 | 48 | | 512 | 2x 950 | 25 | 15 |

Note: Contact us for additional information on supported instance configurations.

FPGA-powered AWS EC2 F2 instances paired with NeuroBlade’s pre-built AMI enable seamless VM deployment via AWS Marketplace, with elastic scaling and a pay-as-you-go model.
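Once the AMI is available in your account, launching an F2 instance from it can be scripted with the AWS SDK for Python (boto3). This is a minimal sketch, assuming you have already subscribed to the NeuroBlade listing on AWS Marketplace (typically required before a Marketplace AMI can be launched) and configured AWS credentials. The AMI ID is a hypothetical placeholder, since the actual NeuroBlade AMI ID is not given on this page, and the helper names are illustrative; only the `boto3.client("ec2")` and `run_instances` calls come from the AWS SDK itself. The helper accepts only the two F2 instance types listed in the table on this page.

```python
from typing import Dict, List

# F2 instance types listed in the table on this page.
F2_INSTANCE_TYPES = {"f2.6xlarge", "f2.12xlarge"}

# Hypothetical placeholder: replace with the AMI ID from the NeuroBlade Marketplace listing.
PLACEHOLDER_AMI_ID = "ami-0123456789abcdef0"


def build_launch_params(ami_id: str, instance_type: str = "f2.6xlarge", count: int = 1) -> Dict[str, object]:
    """Build keyword arguments for EC2 run_instances, validating the instance type and count."""
    if instance_type not in F2_INSTANCE_TYPES:
        raise ValueError(f"unsupported instance type: {instance_type}")
    if count < 1:
        raise ValueError("count must be at least 1")
    return {
        "ImageId": ami_id,
        "InstanceType": instance_type,
        # MinCount == MaxCount: launch exactly `count` instances or none at all.
        "MinCount": count,
        "MaxCount": count,
    }


def launch_instances(params: Dict[str, object], region: str) -> List[str]:
    """Launch instances with boto3 and return their instance IDs (requires AWS credentials)."""
    import boto3  # imported here so build_launch_params works without the AWS SDK installed

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.run_instances(**params)
    return [instance["InstanceId"] for instance in response["Instances"]]
```

A typical call is `launch_instances(build_launch_params(ami_id, "f2.12xlarge"), region)`, after substituting the real AMI ID and a region where F2 instances are offered. Keeping parameter construction separate from the API call lets you validate the request without touching AWS.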

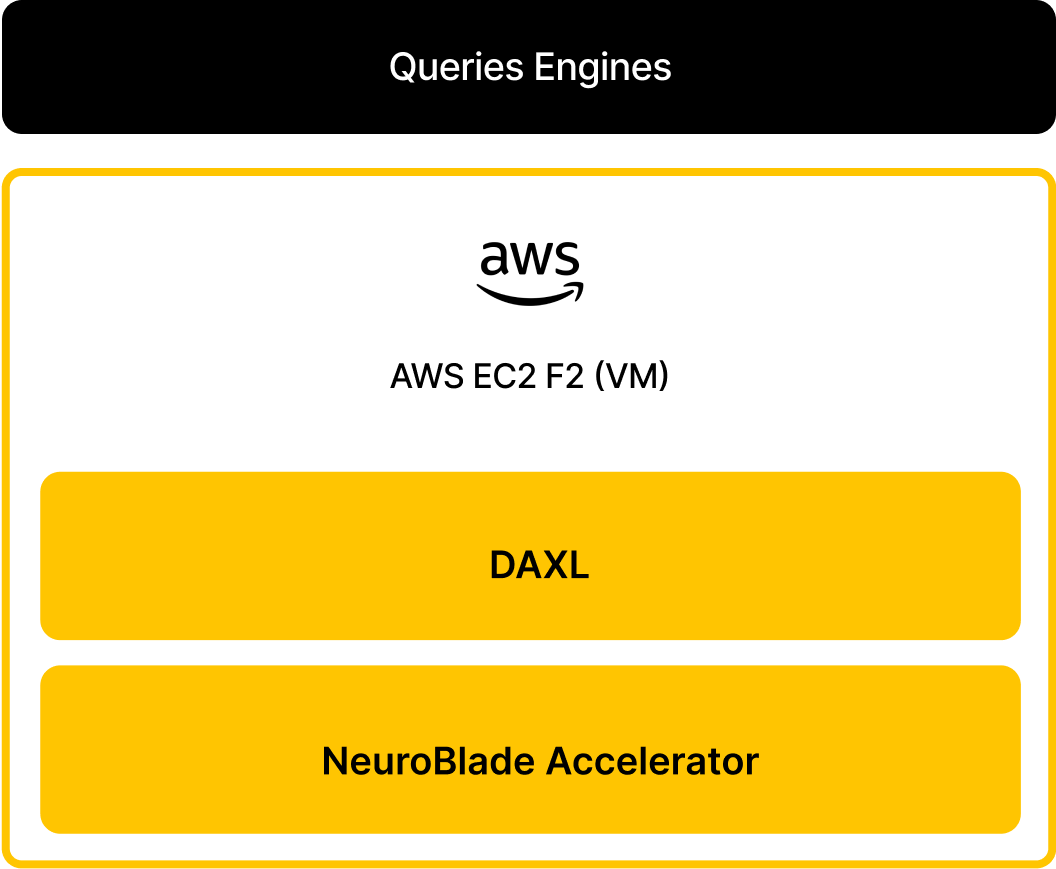

The DAXL API integrates seamlessly with popular open-source analytical query engines such as Apache Spark, Presto, and ClickHouse, enabling easy adoption within existing workflows.

Note: Contact us for additional reference integrations with other open-source query engines.

| Resource | Access |
|---|---|
| DAXL SDK 🔒 | Get Access |
| NeuroBlade Big Data Analytics White Paper | Download |
| NeuroBlade TCO White Paper | Download |
